During my time at the DFKI, I worked on interface and interaction design solutions for robotic applications and participated in the related academic research. The research fields I was involved in included space robotics, robotic maritime exploration, and human-robot collaboration for rehabilitation.

Due to a non-disclosure agreement, I can only show a small part of my work.

Robotics Innovation Center →

Years: 2018–2021

Keywords: Robotic Teleoperation, VR, Eye-Tracking, Hand-Tracking, Unreal Engine, Unity, Leap Motion, Academic Publication

The project focuses on the assembly and installation of infrastructure for space applications by humans and robots working either autonomously or in cooperation. The cooperation follows the concept of "sliding autonomy": robots can either work autonomously, e.g. to support on-site astronauts, or be teleoperated from a distance by an operator who uses an exoskeleton to translate body movements to the robot.

My task was to create interface design principles for a virtual reality interface that operators use to control robotic systems, access on-site information, and immerse themselves in a virtual representation of the environment that the robot captures from its surroundings.

Due to the complex nature of such an endeavour, the interface design had to prioritize unobtrusiveness while offering a wide array of functionalities and remaining dynamically adaptable to unforeseen tasks and environments.

Project TransFit →

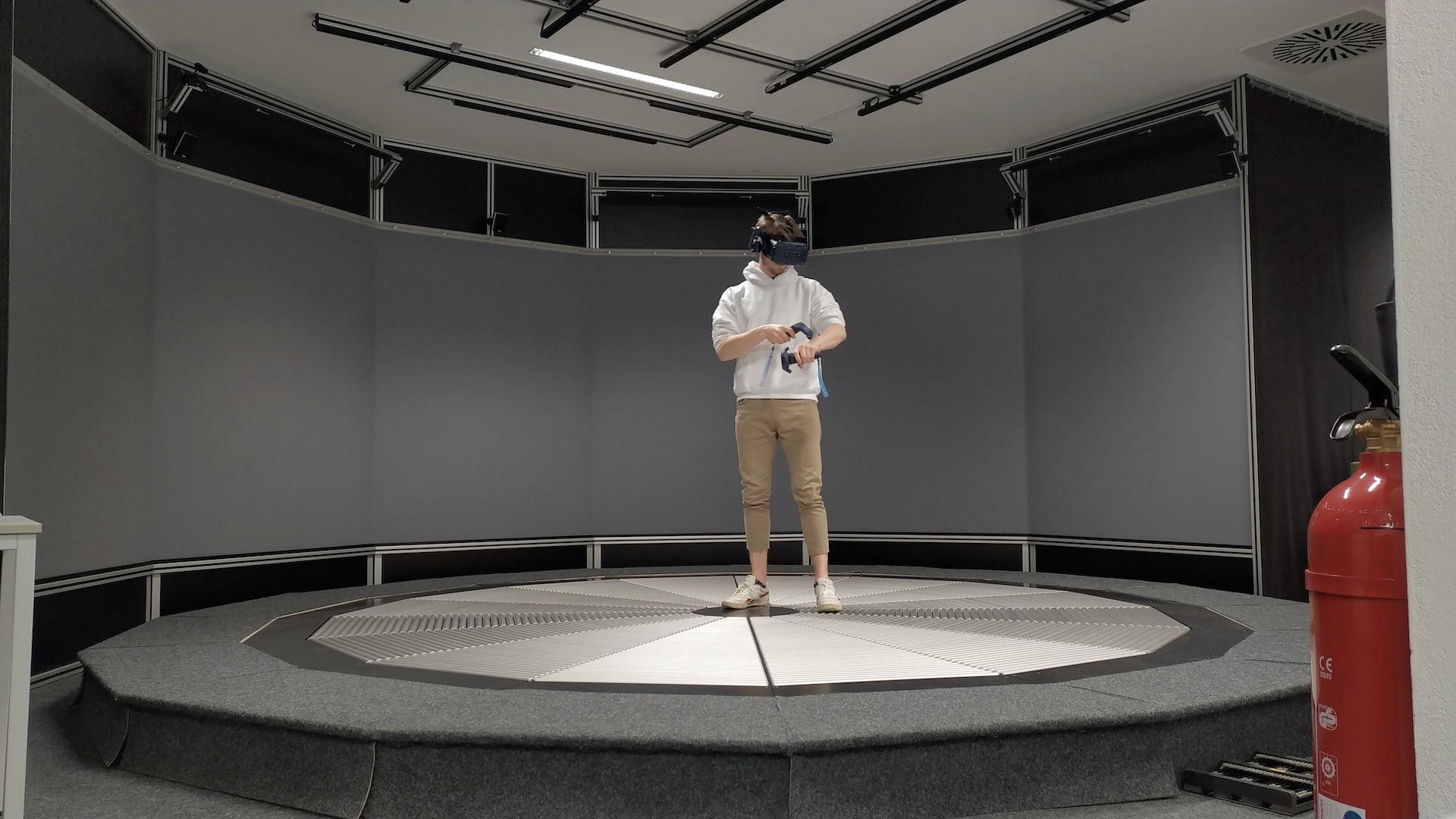

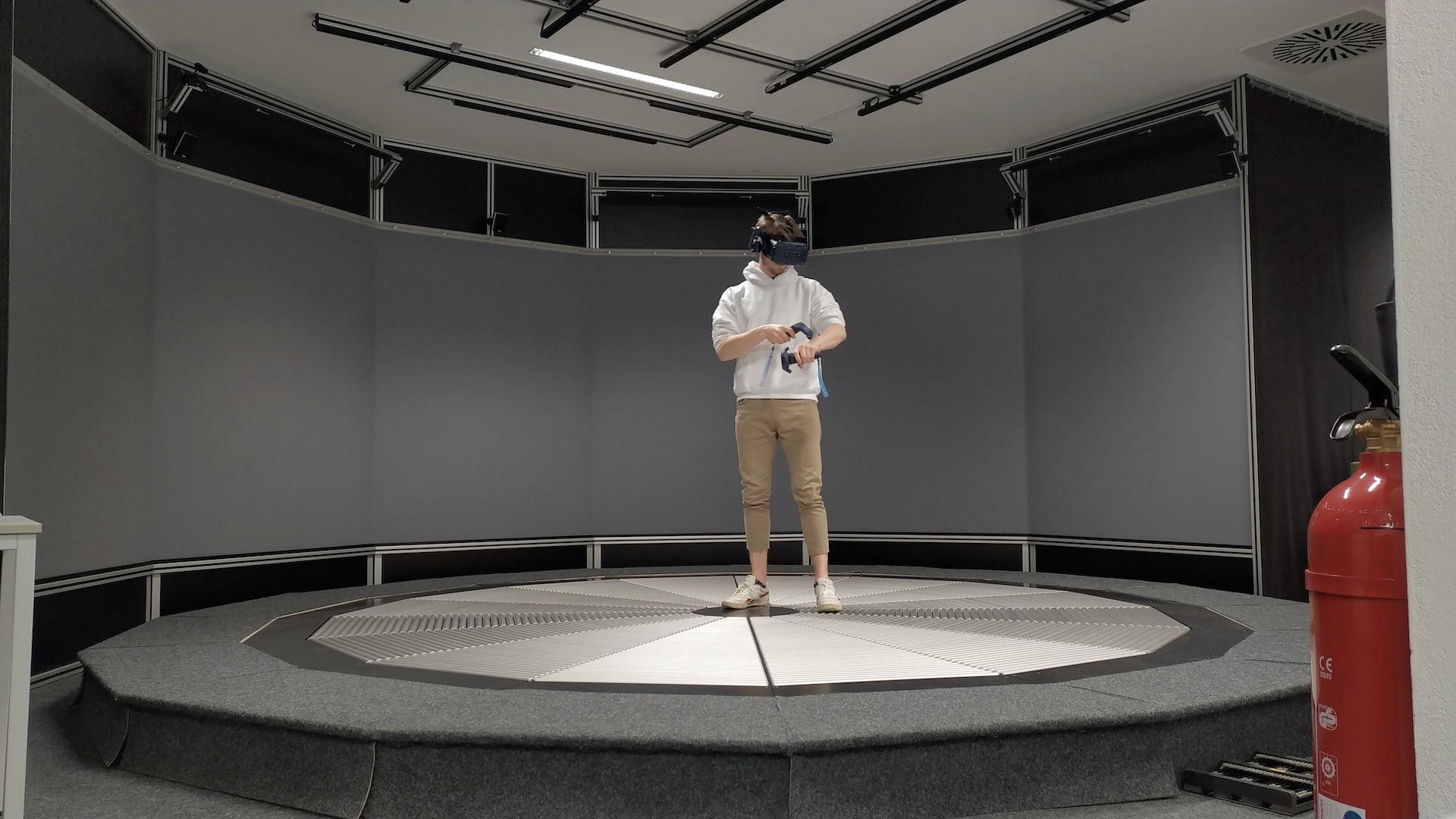

That's me using an omnidirectional treadmill to move freely through a virtual reality environment that I created for project TransFit as a teleoperation interface for human-robot collaboration on the moon. The exoskeleton from the Recupera REHA Project → is used to teleoperate the humanoid robotic system on site.

Authors: Luka Jacke, Michael Maurus, Elsa Andrea Kirchner

Paper abstract:

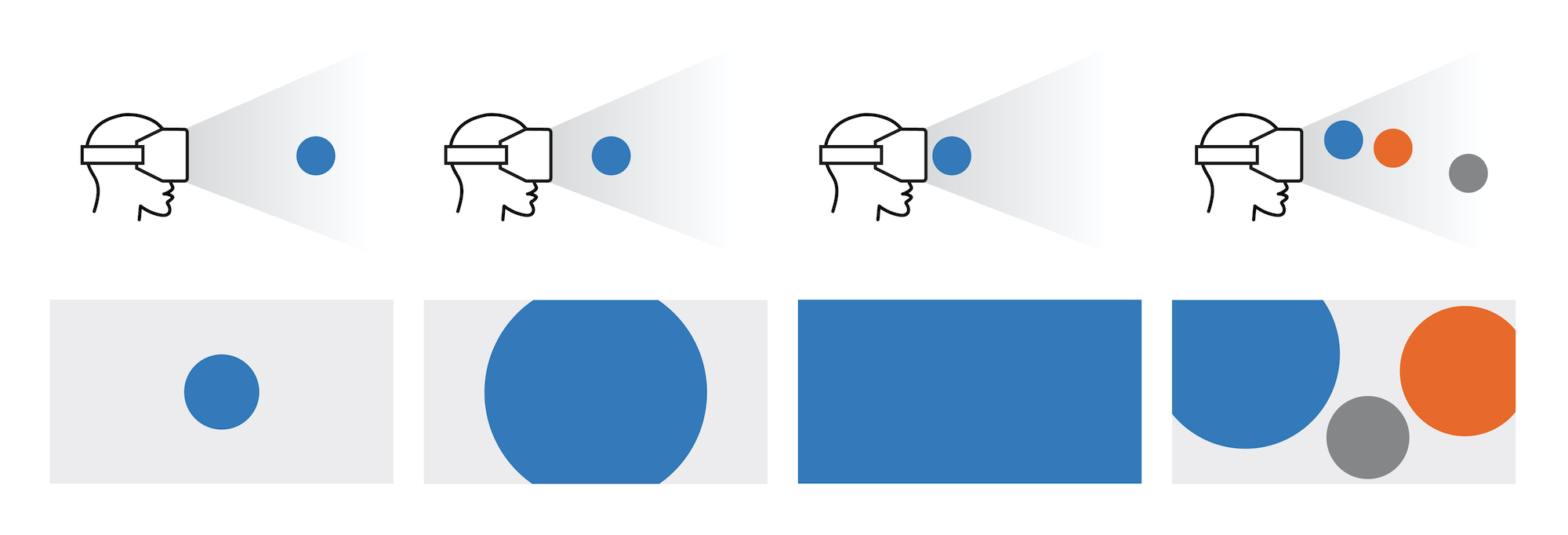

Graphical user interfaces created for scientific prototypes are often designed to support only a specific and well-defined use case. They often use two-dimensional overlay buttons and panels in the view of the operator to cover needed functionalities. For potentially unpredictable and more complex tasks, such interfaces often fall short of the ability to scale properly with the larger amount of information that needs to be processed by the user. Simply transferring this approach to more complex use cases likely introduces visual clutter and leads to an unnecessarily complicated interface navigation that reduces accessibility and potentially overwhelms users.

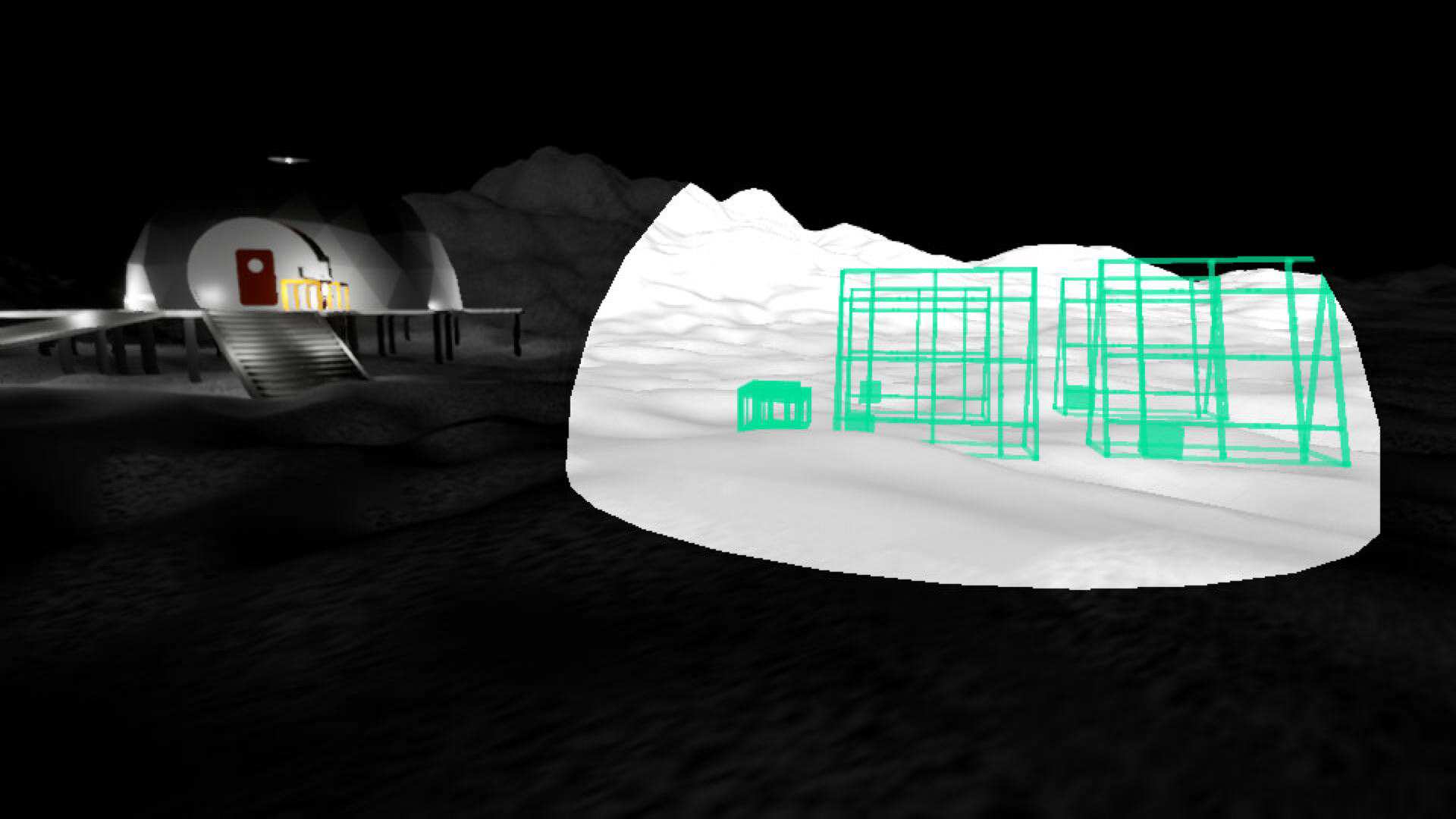

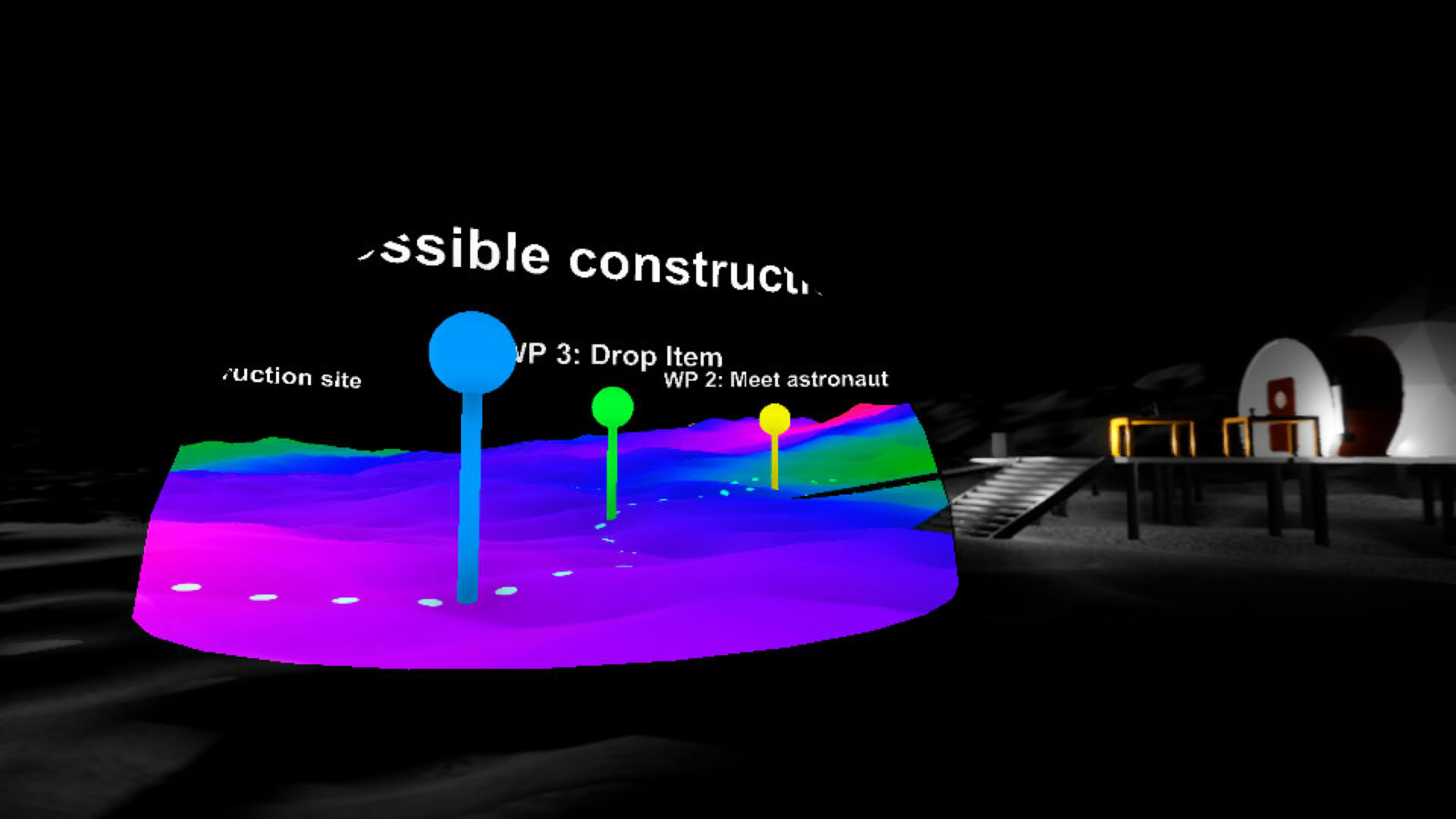

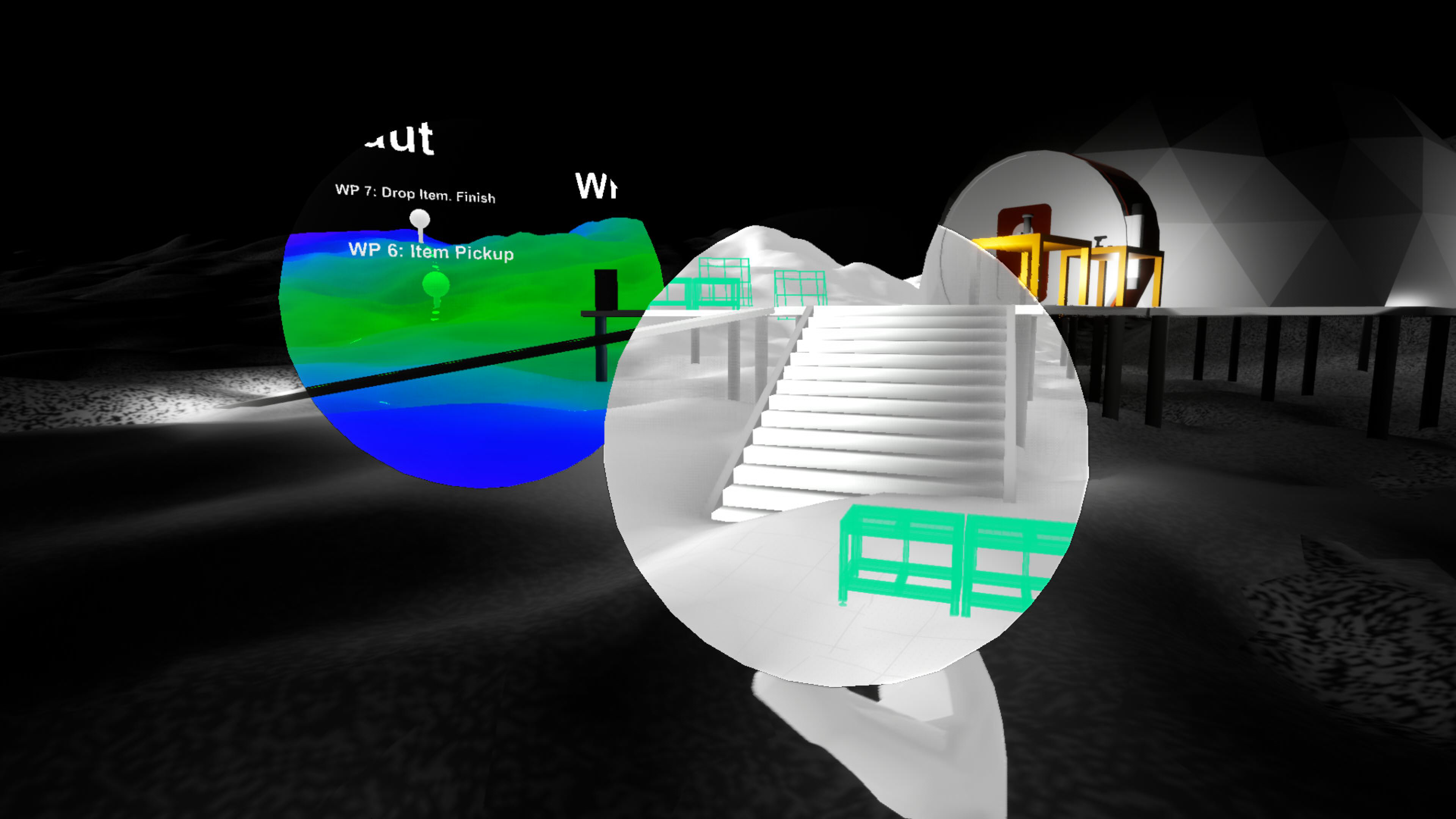

In this paper, we present a possible solution to this problem. In our proposed concept, information layers can be accessed and displayed by placing an augmentation glass in front of the virtual camera. Depending on the placement of the glass, the viewing area can cover only parts of the view or the entire scene. This also makes it possible to use multiple glasses side by side. Furthermore, augmentation glasses can be placed into the virtual environment for collaborative work. With this, our approach is flexible and can be adapted very quickly to changing demands.
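To make the placement behaviour concrete, here is a minimal geometric sketch, assuming a glass can be modelled as a flat rectangle in world space and that a scene point is shown with a glass's information layer whenever the line of sight from the virtual camera to that point passes through the glass. The class `AugmentationGlass`, the helper `layers_for_point`, and the layer names are hypothetical illustrations, not the prototype's Unreal Engine implementation.

```python
from dataclasses import dataclass

Vec = tuple[float, float, float]


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


@dataclass
class AugmentationGlass:
    """Hypothetical model: a flat rectangle that carries one information layer."""
    layer: str          # illustrative layer name, e.g. "traversability"
    center: Vec         # world-space centre of the glass
    u: Vec              # unit vector along the glass width
    v: Vec              # unit vector along the glass height (orthogonal to u)
    half_width: float
    half_height: float

    def covers(self, eye: Vec, point: Vec) -> bool:
        """True if the line of sight from `eye` to `point` passes through the glass."""
        normal = cross(self.u, self.v)
        direction = sub(point, eye)
        denom = dot(normal, direction)
        if abs(denom) < 1e-9:               # line of sight parallel to the glass
            return False
        t = dot(normal, sub(self.center, eye)) / denom
        if not 0.0 < t < 1.0:               # glass must lie between eye and point
            return False
        hit = (eye[0] + t * direction[0],
               eye[1] + t * direction[1],
               eye[2] + t * direction[2])
        local = sub(hit, self.center)       # hit point relative to the glass centre
        return (abs(dot(local, self.u)) <= self.half_width
                and abs(dot(local, self.v)) <= self.half_height)


def layers_for_point(eye: Vec, point: Vec, glasses: list[AugmentationGlass]) -> list[str]:
    """Collect the information layers of every glass the point is seen through."""
    return [g.layer for g in glasses if g.covers(eye, point)]


# Two glasses side by side in front of a camera at the origin looking along +z.
eye = (0.0, 0.0, 0.0)
left = AugmentationGlass("traversability", (-0.3, 0.0, 0.5),
                         (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), 0.25, 0.25)
right = AugmentationGlass("task_route", (0.3, 0.0, 0.5),
                          (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), 0.25, 0.25)
print(layers_for_point(eye, (-2.0, 0.0, 5.0), [left, right]))  # ['traversability']
print(layers_for_point(eye, (2.0, 0.0, 5.0), [left, right]))   # ['task_route']
print(layers_for_point(eye, (0.0, 0.0, 5.0), [left, right]))   # []
```

In this model, moving a glass closer to the camera or enlarging it makes it cover more of the view, up to the entire scene. Because `covers` takes the viewer's eye position as a parameter, a glass placed in the environment can be evaluated the same way for each collaborator's viewpoint.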

Prototype made with: Unreal Engine, HTC Vive, Leap Motion

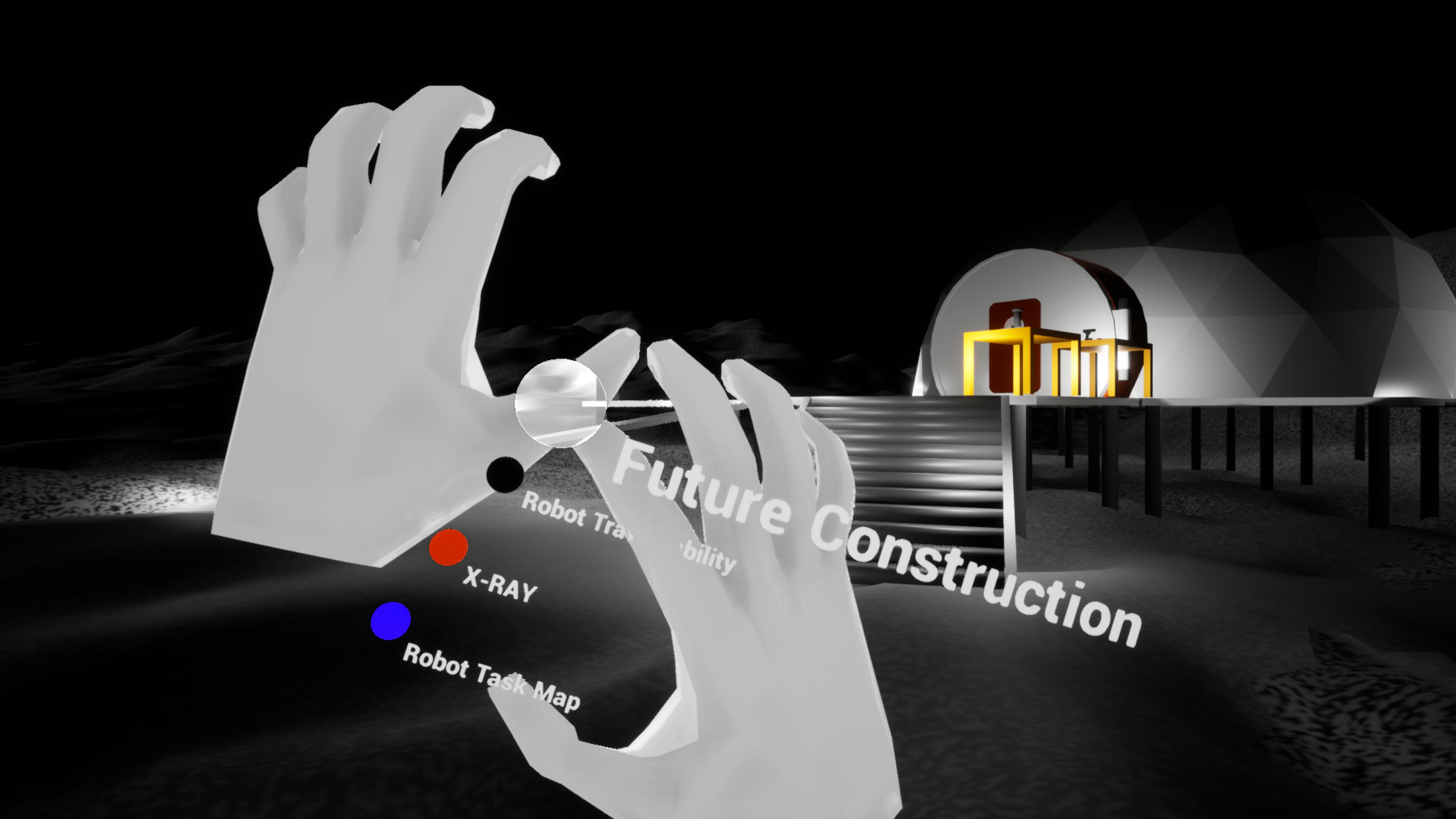

To access information layers, augmentation glasses can be drawn from a stack. This is meant to feel natural: glasses are picked up from the stack and simply dropped back after use. For the presentation of our first prototype, glasses can be selected with the index finger using a Leap Motion hand-tracking controller.
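The draw-and-return interaction can be modelled as a small data structure. This is a sketch under stated assumptions: each glass rests at a fixed slot position, the hand tracker supplies the index-fingertip position as plain coordinates, and a glass is selected when the fingertip comes within an assumed radius of its slot. `GlassStack`, `pick`, `drop_back`, and the 5 cm radius are hypothetical, and no Leap Motion SDK calls are shown.

```python
import math
from dataclasses import dataclass, field
from typing import Optional

Vec = tuple[float, float, float]


@dataclass
class GlassStack:
    """Hypothetical stack of augmentation glasses, each resting at a fixed slot."""
    positions: dict[str, Vec]                        # layer name -> slot position in metres
    select_radius: float = 0.05                      # assumed fingertip reach for a selection
    in_hand: set[str] = field(default_factory=set)   # glasses currently drawn from the stack

    def pick(self, fingertip: Vec) -> Optional[str]:
        """Draw the available glass closest to the fingertip, if it is within reach."""
        candidates = [(math.dist(fingertip, pos), layer)
                      for layer, pos in self.positions.items()
                      if layer not in self.in_hand]
        if not candidates:
            return None
        distance, layer = min(candidates)
        if distance > self.select_radius:
            return None
        self.in_hand.add(layer)
        return layer

    def drop_back(self, layer: str) -> None:
        """Return a drawn glass to its slot so it can be picked again."""
        self.in_hand.discard(layer)


stack = GlassStack({"task_route": (0.0, 0.0, 0.0), "traversability": (0.0, 0.1, 0.0)})
print(stack.pick((0.0, 0.01, 0.0)))  # task_route
print(stack.pick((0.0, 0.01, 0.0)))  # None: the remaining glass is out of reach
stack.drop_back("task_route")
print(stack.pick((0.0, 0.01, 0.0)))  # task_route
```

Keeping drawn glasses in a set, rather than deleting them from `positions`, means every glass keeps its home slot, so dropping it back is a single operation.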

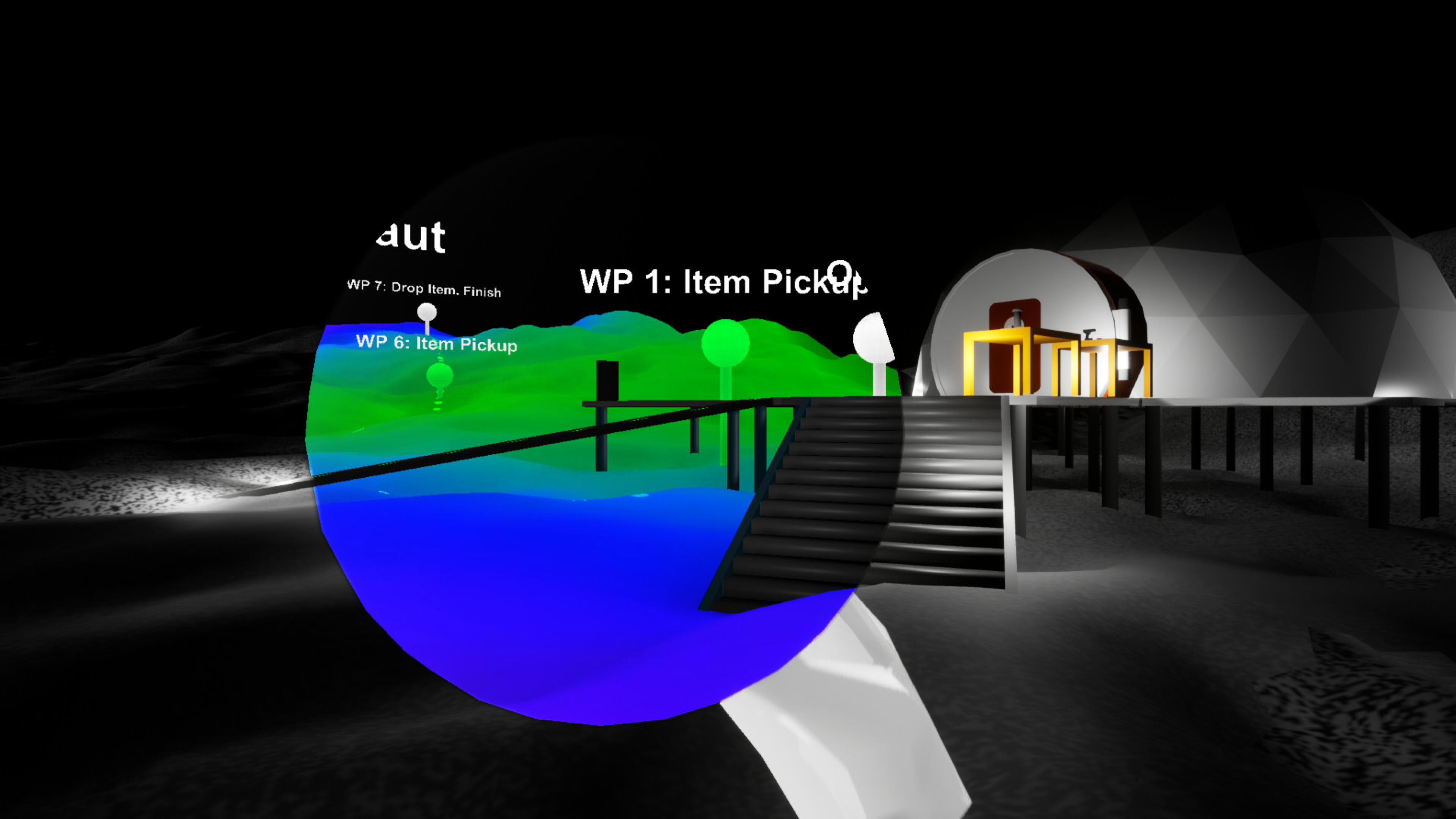

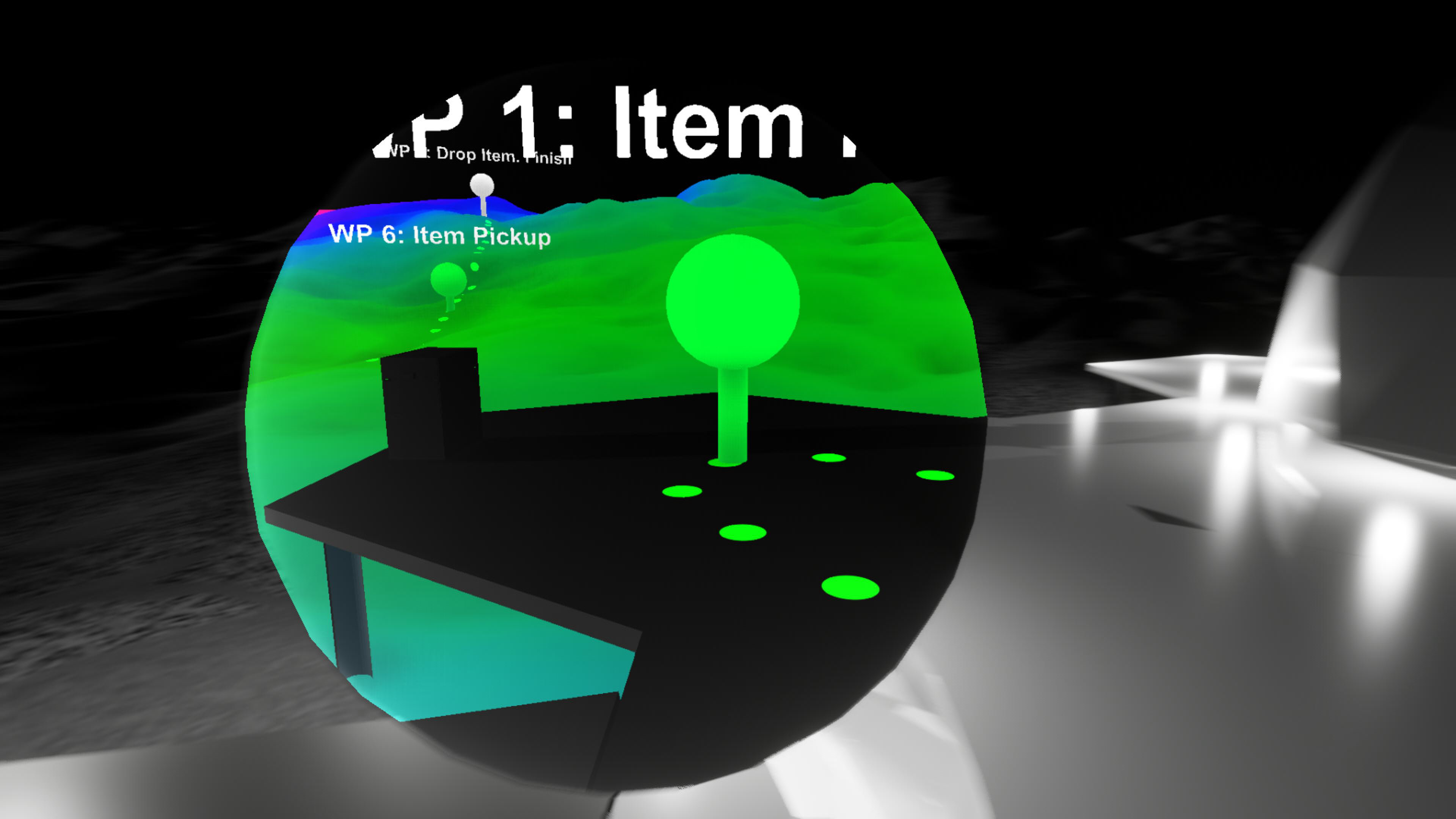

An augmentation glass is used to quickly access the robot's task route and a traversability map

Multiple augmentation glasses can be used side by side

Augmentation glasses can be positioned in the virtual environment for collaborative use