The Hot Air Generator is an AI-driven, interactive media

installation that exposes the tendency of generative AI models to

hallucinate believable, yet non-factual data when prompted with

information they have not been explicitly trained on.

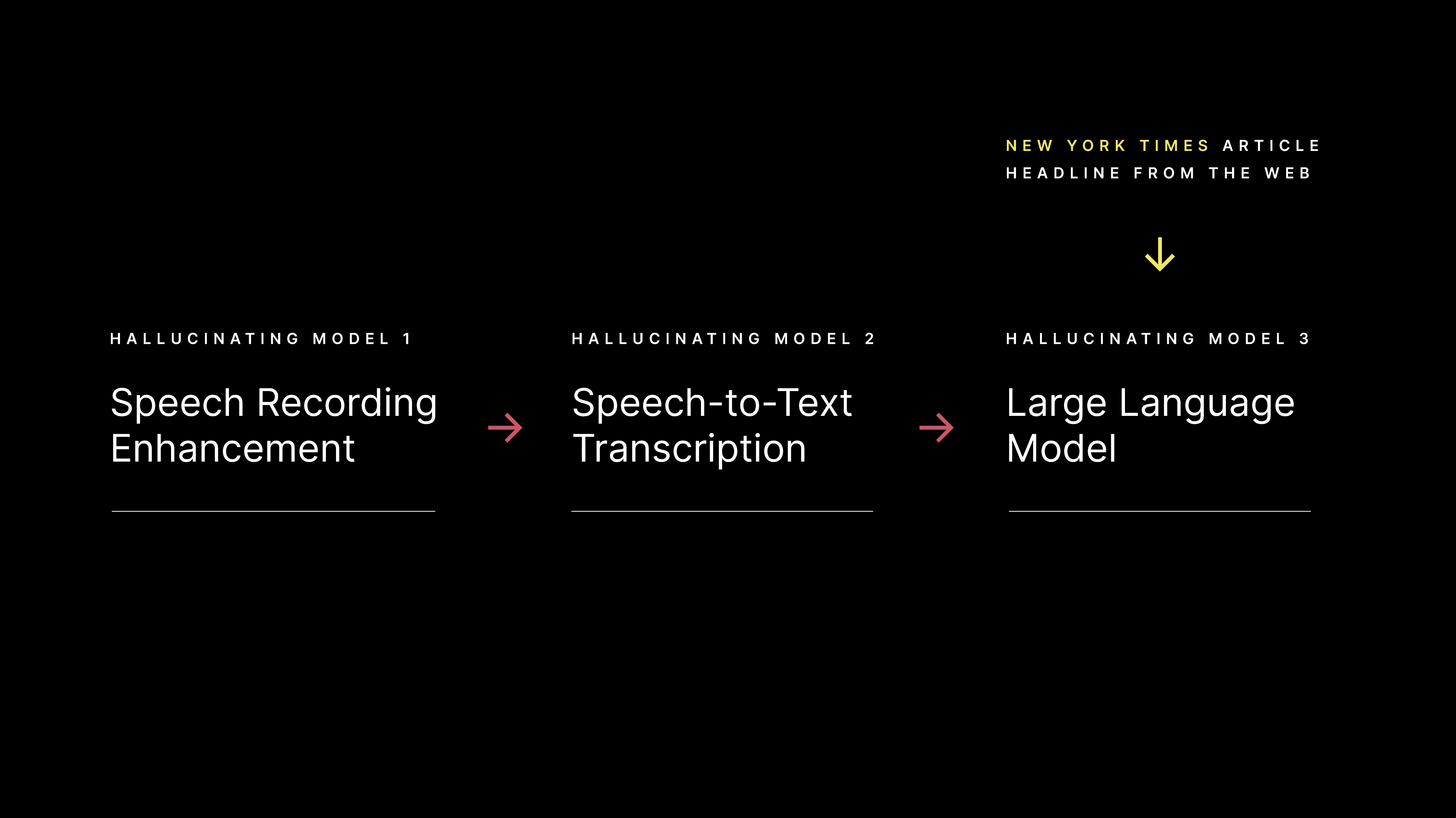

After

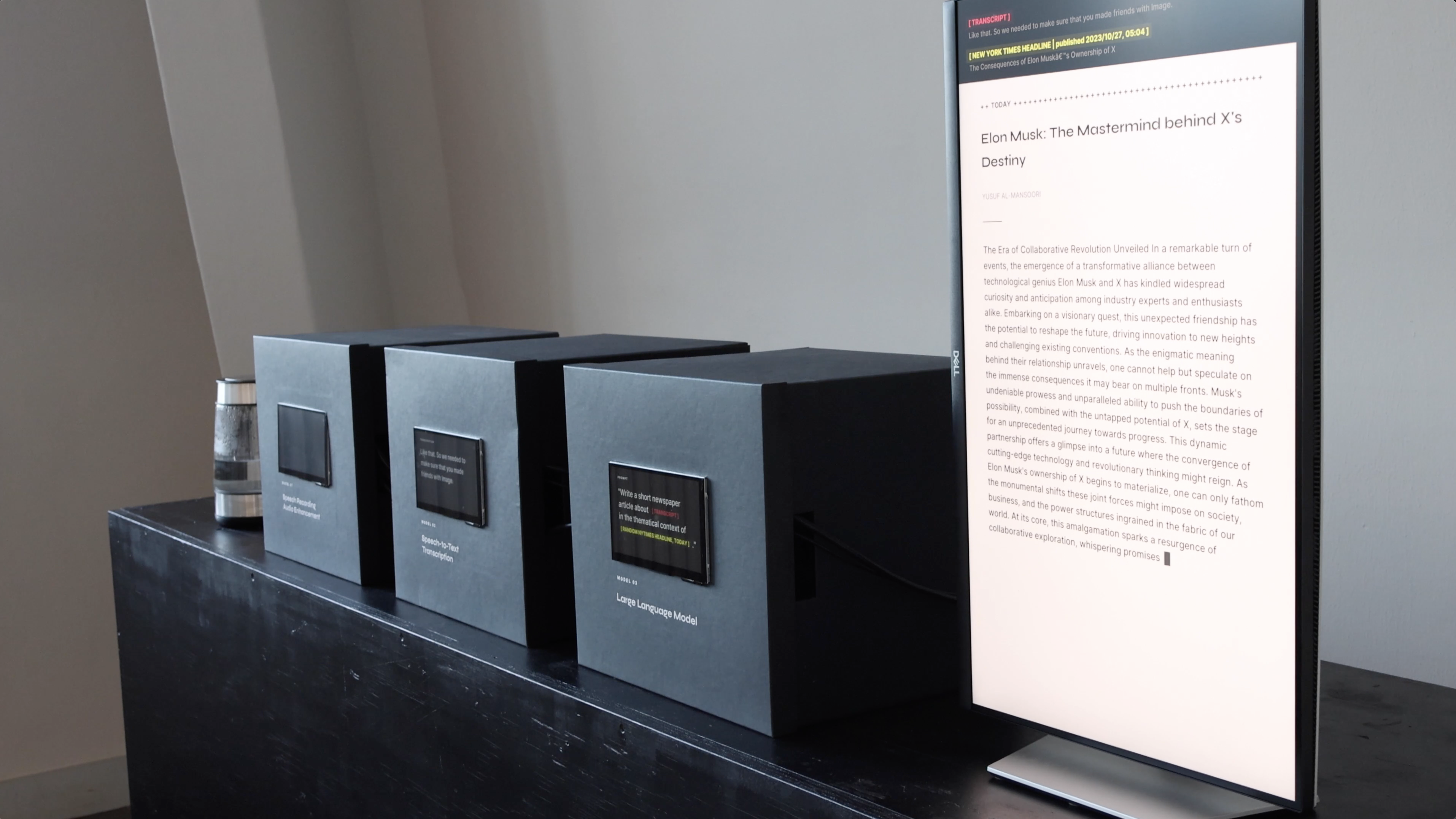

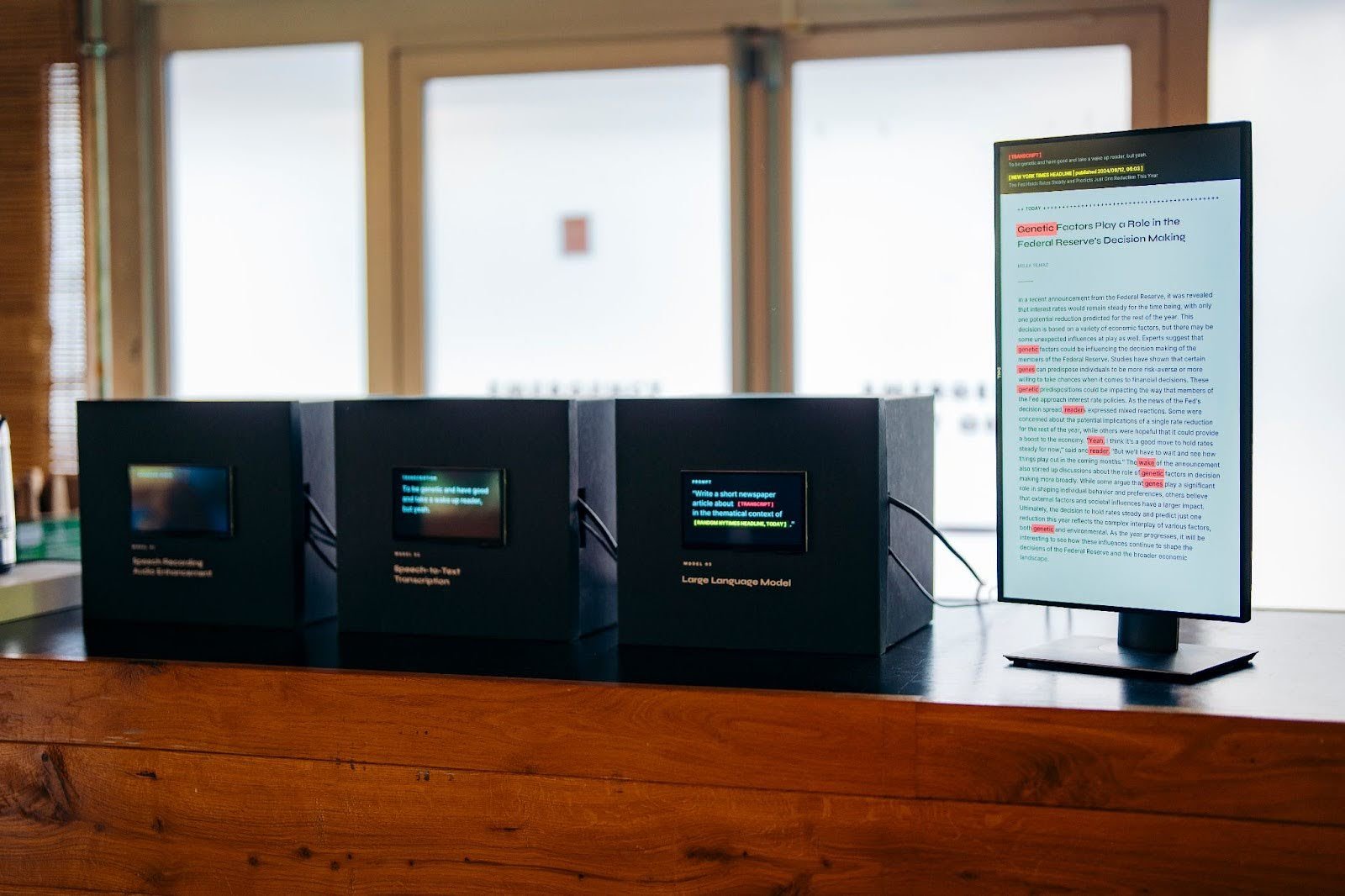

recording the sound of a steaming water kettle, sequentially chained

up AI models (speech audio enhancement model → speech-to-text model

→ large language model) process the outputs of their predecessors

and create a newspaper article from thin air.

Year: 2023

Tools used: Javascript, MQTT, Processing, Arduino, ChatGPT, The New York Times API, Various AI Services.

Exhibited at: Mozilla MozFest House Amsterdam 2024 and TRANSFORM 2023 Conference on AI, Sustainability, Art and Design, in Trier, Germany.

The chain of machine learning models that receive the output of their predecessors as inputs.

The speech audio enhancement model listens to the emitted sounds of the water kettle.

AI models can ever only output synthesized forms of the data they have been fed with in the training process. Hence, as the audio recording enhancement model has only "known"

human speech, it cannot generate anything else than that. This

leads to the model to confidentally interpret the dynamic noises of the water kettle

as human speech and thus "enhances" them into sounds that cleary resemble a human

voice, which are yet entirely incomprehensible and void of semantic meaning.

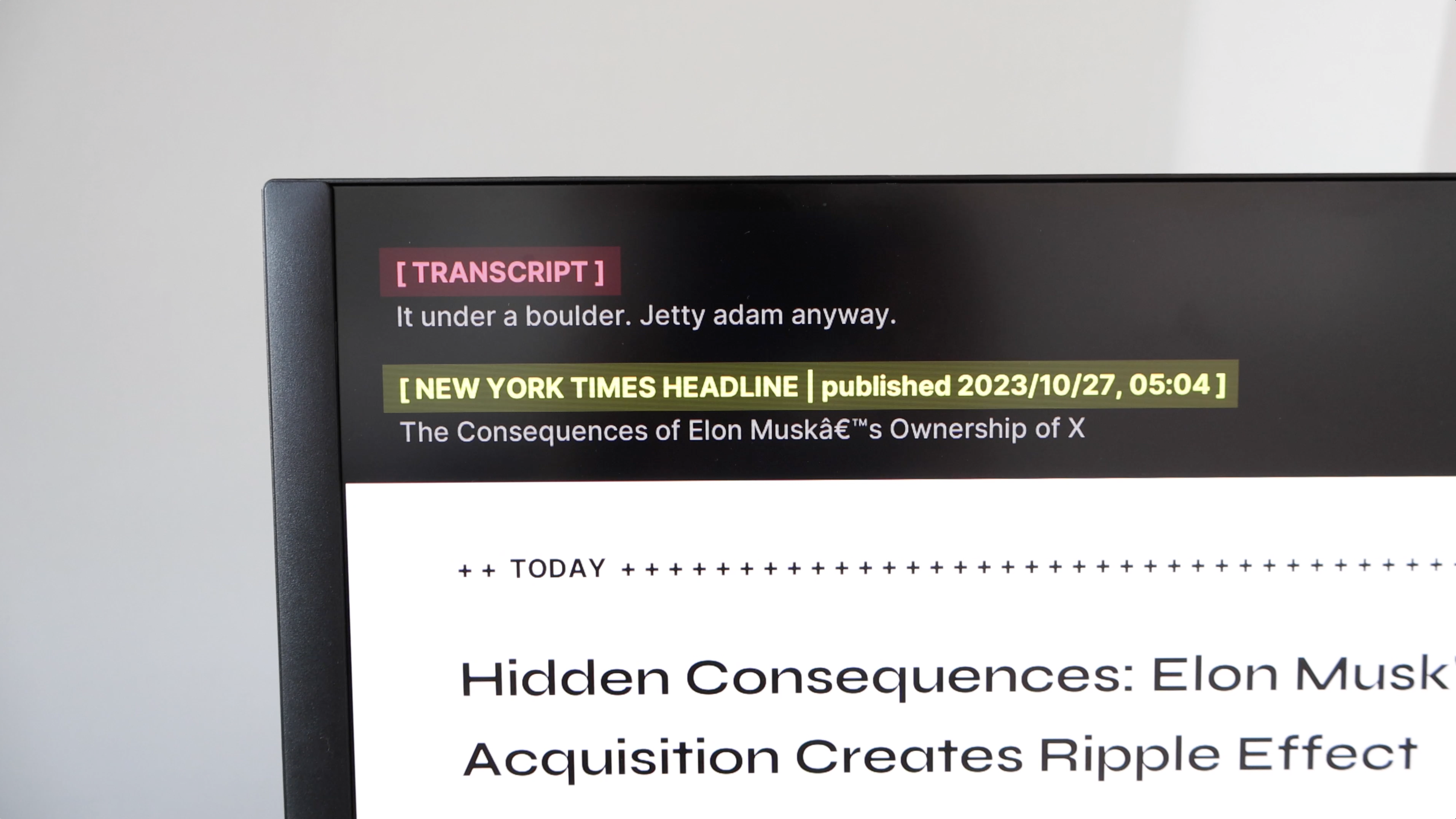

The mumbling output sound is then fed into the next AI - a

speech-to-text model where the same phenomenon occurs. Based on a

statistical analysis of what matches speech data it has been

originally trained on most, the model naturally "identifies" parts of the

audio output of the previous model as clear speech and transcribes it

into a non-sensical english sentence.

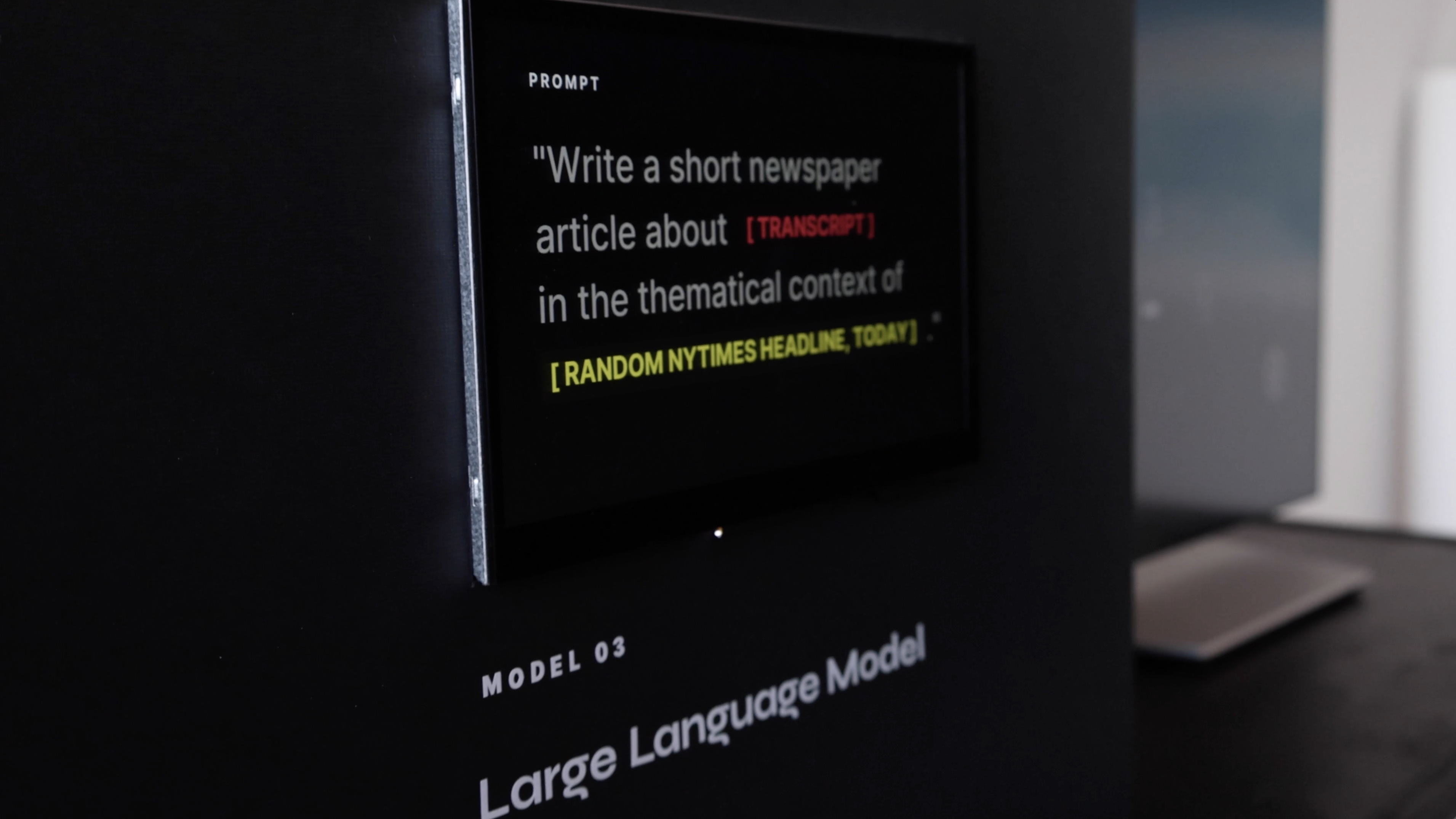

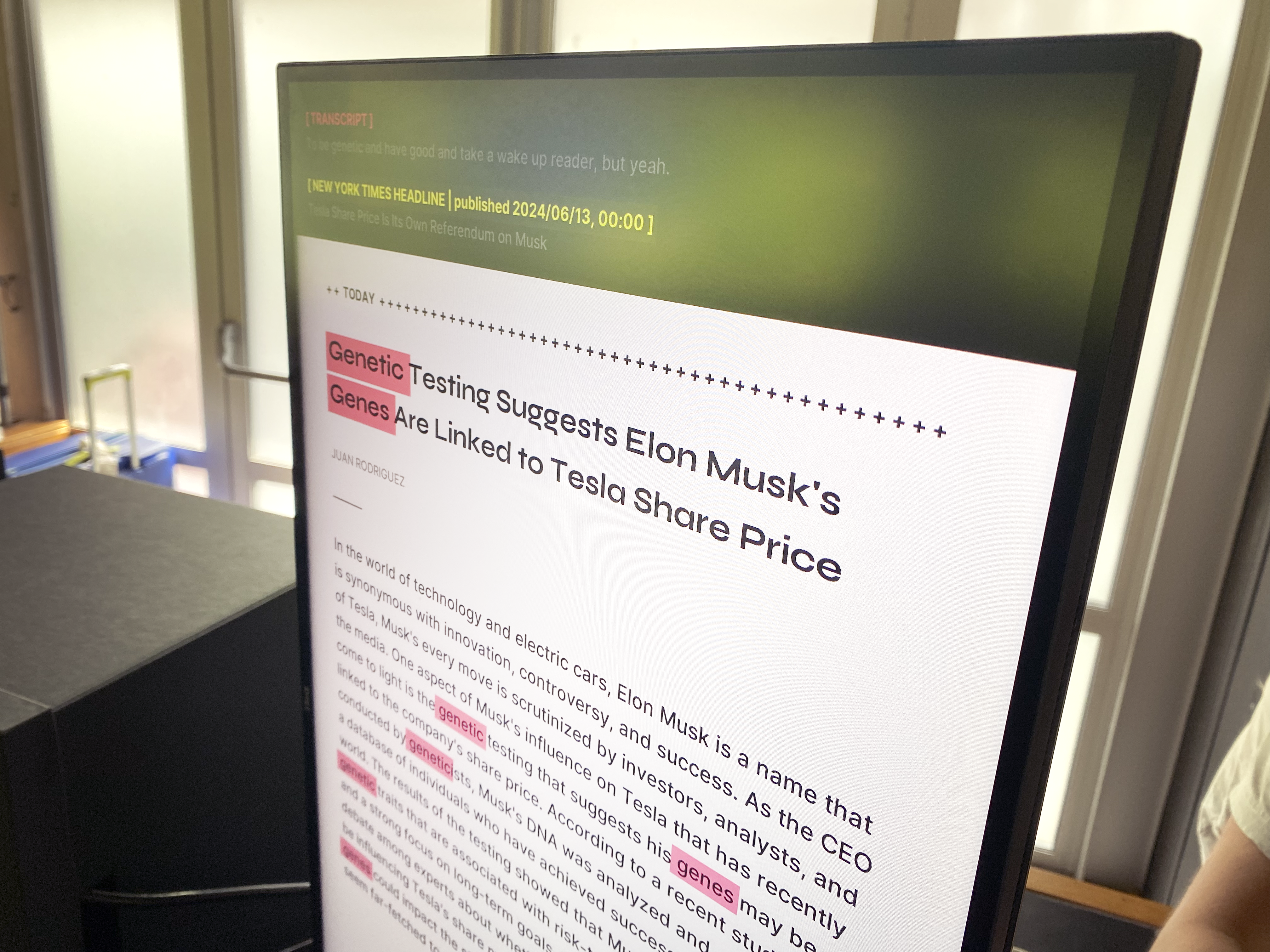

In a last step, this generated sentence is sent to a large language model. The model is prompted to combine the transcript with a headlinefrom the title page of the new york times at the given moment in time which is received via an API, and generate a newspaper article from it. Eventually, the model creates a text that is grammatically correct and semantically plausible, yet entirely fictional and non-factual.

Red markings in the generated text reveal the connection to the underlying hallucinated transcription the article is based on.